Making AI Optimization Feel Natural

July 2-5, 2025 Development Update

I've been solving a tricky problem: how do you make AI-powered infrastructure optimization feel natural to use?

The last few days were all about taking our workspace architecture and making it actually work for real users.

Building on the Foundation

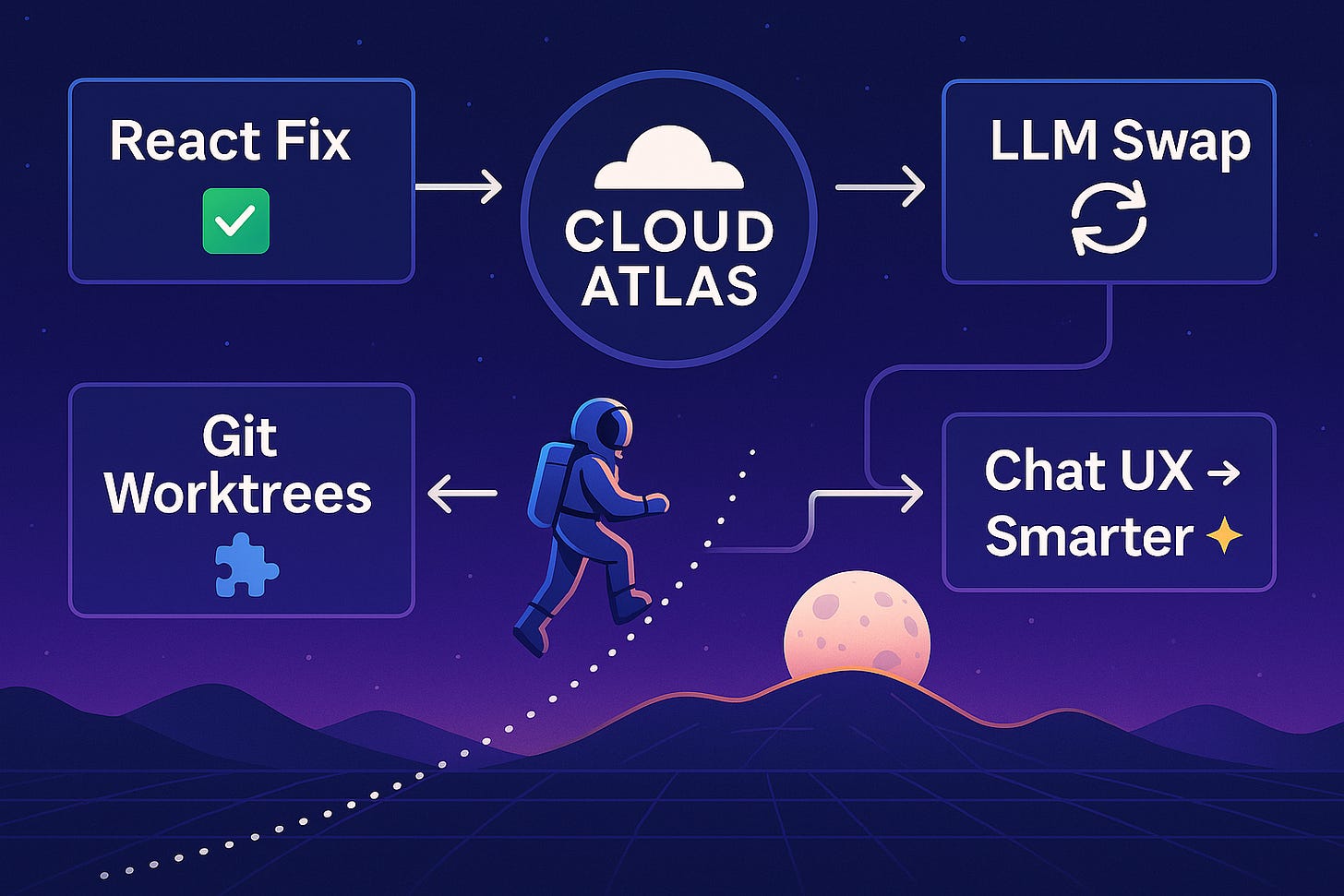

Last week, I wrote about the workspace architecture we built for Cloud Atlas. Each AWS project gets its own workspace. Each optimization candidate has its own process flow and conversation thread.

The foundation was solid, but the user experience needed work.

This week I focused on two key parts of that system:

Cloud Atlas Insight: The frontend where users interact with their optimization candidates

Flow: Our AI engine that handles the execution planning and LLM integration

Fixing the React Timing Issues

The biggest technical challenge was a React state timing issue. Users would select an optimization step and start chatting, but their messages wouldn't display correctly.

The problem was global streaming state. When multiple optimization candidates were active, messages from different steps would conflict and create race conditions.

I fixed this by creating isolated streaming contexts for each step. Now each optimization candidate has its own conversation thread that doesn't interfere with others.

This sounds technical, but the user impact was huge. Before, people would type messages and watch them disappear. Now the chat flows smoothly.
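To make the idea concrete, here is a minimal sketch of per-step stream isolation. It is written in Python for brevity and is not the actual React code in Cloud Atlas Insight; `StepStream`, `StreamRegistry`, and the step IDs are hypothetical names used only for illustration. The point is that streamed chunks are keyed by step ID instead of landing in one shared buffer.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class StepStream:
    """Streaming state owned by exactly one optimization step (hypothetical name)."""
    chunks: list[str] = field(default_factory=list)
    done: bool = False

    def text(self) -> str:
        return "".join(self.chunks)


class StreamRegistry:
    """Keeps one StepStream per step ID instead of a single global buffer."""

    def __init__(self) -> None:
        self._streams: dict[str, StepStream] = defaultdict(StepStream)

    def append(self, step_id: str, chunk: str) -> None:
        # A chunk only ever touches the stream of the step it belongs to.
        self._streams[step_id].chunks.append(chunk)

    def finish(self, step_id: str) -> None:
        self._streams[step_id].done = True

    def is_done(self, step_id: str) -> bool:
        return self._streams[step_id].done

    def text(self, step_id: str) -> str:
        return self._streams[step_id].text()


registry = StreamRegistry()

# Chunks from two steps arrive interleaved, as they would with concurrent streams.
incoming = [
    ("step-a", "Resize "),
    ("step-b", "Delete "),
    ("step-a", "the instance"),
    ("step-b", "the unused volume"),
]
for step_id, chunk in incoming:
    registry.append(step_id, chunk)
registry.finish("step-a")

print(registry.text("step-a"))
print(registry.text("step-b"))
```

With a single global buffer, the same interleaved chunks would end up mixed into one message. In a React app the equivalent would be state scoped to each step rather than a shared store, but the keying idea is the same.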

Making the Interface Guide Users

The workspace architecture works great for organizing optimization workflows. But users were getting lost.

They'd start typing before selecting which optimization they wanted to work on. They'd lose track of which execution step they were on.

The biggest fix was making chat input conditional: it only appears after you select a specific optimization step. This resolved the main confusion about when users could start interacting with the system.

These aren't flashy features. But they make the difference between someone understanding your product and giving up.

Continuing the Backend Work

While fixing the UI, I was also breaking apart our backend. The main service file had grown to 3,125 lines. Finding anything took forever.

I split it into focused modules:

Chat handling for each optimization candidate

File operations for Terraform assets

Plan generation with LLM integration

Git isolation so candidates don't interfere with each other

The main file is now 148 lines. When I need to fix something, I know exactly where to look. And when AI code agents help with development, they can actually understand the codebase structure instead of getting lost in massive files.

LLM Provider Abstraction

One interesting challenge was making our system work with different AI models. Right now we use Claude, but users might want GPT-4 or other models for different optimization types.

I built a provider factory system that abstracts away the specific LLM. The workspace architecture doesn't care which model is generating the optimization plans - it just needs them in the right format.

This also made testing much easier. Instead of calling real AI models during tests, we can use mock providers that return predictable responses.
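A provider factory along these lines might look like the sketch below. It is an illustration under assumptions, not the Flow service's real code: `PlanProvider`, `MockProvider`, `register_provider`, and `create_provider` are hypothetical names, and real providers for Claude or GPT-4 would be registered the same way behind the same interface.

```python
from typing import Callable, Protocol


class PlanProvider(Protocol):
    """Anything that can turn a prompt into a plan (hypothetical interface)."""

    def generate_plan(self, prompt: str) -> str: ...


class MockProvider:
    """Returns a canned response so tests never call a real model."""

    def __init__(self, canned: str = '{"steps": []}') -> None:
        self.canned = canned
        self.prompts: list[str] = []

    def generate_plan(self, prompt: str) -> str:
        # Record the prompt so a test can assert on what was asked.
        self.prompts.append(prompt)
        return self.canned


# Registry mapping a provider name to a zero-argument factory.
_PROVIDERS: dict[str, Callable[[], PlanProvider]] = {}


def register_provider(name: str, factory: Callable[[], PlanProvider]) -> None:
    _PROVIDERS[name] = factory


def create_provider(name: str) -> PlanProvider:
    try:
        return _PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"unknown provider: {name!r}") from None


register_provider("mock", MockProvider)

provider = create_provider("mock")
plan = provider.generate_plan("Right-size the idle instances")
print(plan)
```

Callers only depend on `generate_plan`, so swapping the model is a matter of registering a different factory under a different name.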

Git Worktree Magic

Here's something I'm proud of: each optimization candidate now gets its own isolated git environment.

When you're optimizing infrastructure, you might have 20+ candidates running simultaneously. Each one needs to modify Terraform files, run tests, check results. Without isolation, they'd step on each other.

We use git worktrees to give each candidate its own workspace. They can all work in parallel without conflicts. When an optimization succeeds, we merge the changes back to main.
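As a rough sketch of the mechanics, the helpers below build the `git worktree` commands for one candidate. The directory layout (`worktrees/<candidate_id>` next to the repository), the `candidate/<id>` branch naming, and the function names are assumptions made for illustration, not the project's actual conventions.

```python
import subprocess
from pathlib import Path


def worktree_path(repo: Path, candidate_id: str) -> Path:
    # Assumed layout: worktrees live in a sibling directory of the main checkout.
    return repo.parent / "worktrees" / candidate_id


def worktree_add_command(repo: Path, candidate_id: str, base: str = "main") -> list[str]:
    """Build `git worktree add -b <branch> <path> <base>` for one candidate."""
    branch = f"candidate/{candidate_id}"  # hypothetical branch naming scheme
    path = worktree_path(repo, candidate_id)
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base]


def worktree_remove_command(repo: Path, candidate_id: str) -> list[str]:
    """Build `git worktree remove <path>` to clean up after a candidate."""
    path = worktree_path(repo, candidate_id)
    return ["git", "-C", str(repo), "worktree", "remove", str(path)]


def create_worktree(repo: Path, candidate_id: str, base: str = "main") -> Path:
    """Create the isolated checkout; raises CalledProcessError if git fails."""
    subprocess.run(worktree_add_command(repo, candidate_id, base), check=True)
    return worktree_path(repo, candidate_id)


if __name__ == "__main__":
    # Print the command rather than running it, so the sketch has no side effects.
    print(" ".join(worktree_add_command(Path("/srv/atlas/repo"), "cand-7")))
```

Each worktree is a separate checkout on its own branch that shares the same object store, so candidates can edit Terraform files in parallel without touching each other's working directory.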

Performance Improvements

I also sped up the chat experience. Message streaming is now 2.7x faster, and I cut the length of the chat responses by 60% while keeping all the important information.

Users don't want to read paragraphs of explanation for simple optimizations. They want to know what's changing and why, then move on to execution.

Current Status

The UI flow is much better. Users can navigate through optimization workflows without getting lost, though I'm still refining the experience.

The backend architecture is in place: each optimization gets its own isolated git environment. But I haven't tested it with hundreds of simultaneous optimizations yet. That's still ahead of me.

Right now I'm working on the integration piece: making sure when someone approves an optimization plan in the UI, our Flow service executes exactly what they expect.

This involves a lot of testing. Edge cases where the AI suggests something unexpected. Scenarios where infrastructure changes don't go as planned. Making sure rollback procedures work correctly.

The Reality of AI Integration

Building with AI isn't like building with traditional APIs. Those APIs are mostly predictable. You send the same input, you get the same output.

LLMs are different. Your UI might expect a simple optimization plan, but the AI might suggest rewriting your entire infrastructure. Or it might give perfect technical advice that's impossible to execute safely.

We're still working through these integration challenges. Testing each type of optimization. Making sure the AI responses fit the workspace flow we designed.
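One way to catch responses that don't fit is to check a plan before it ever reaches execution. The sketch below is purely illustrative: the JSON shape (a `changes` list of objects with a `resource` field), the `max_changes` limit, and the names `PlanCheck` and `check_plan` are assumptions, not the format Flow actually uses.

```python
import json
from dataclasses import dataclass


@dataclass
class PlanCheck:
    ok: bool
    reason: str = ""


def check_plan(raw: str, allowed_resources: set[str], max_changes: int = 5) -> PlanCheck:
    """Reject plans that are malformed, too large, or touch out-of-scope resources."""
    try:
        plan = json.loads(raw)
    except json.JSONDecodeError:
        return PlanCheck(False, "response is not valid JSON")

    changes = plan.get("changes") if isinstance(plan, dict) else None
    if not isinstance(changes, list):
        return PlanCheck(False, "missing 'changes' list")

    # A "rewrite everything" answer shows up as an unusually long change list.
    if len(changes) > max_changes:
        return PlanCheck(False, f"too many changes: {len(changes)} > {max_changes}")

    for change in changes:
        resource = change.get("resource") if isinstance(change, dict) else None
        if not isinstance(resource, str) or resource not in allowed_resources:
            return PlanCheck(False, f"out-of-scope resource: {resource!r}")

    return PlanCheck(True)


allowed = {"aws_instance.web", "aws_ebs_volume.logs"}
good = '{"changes": [{"resource": "aws_instance.web", "action": "resize"}]}'
bad = '{"changes": [{"resource": "aws_vpc.main", "action": "replace"}]}'

print(check_plan(good, allowed))
print(check_plan(bad, allowed))
```

A check like this doesn't make the model predictable, but it turns a surprising answer into an explicit rejection instead of an unexpected infrastructure change.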

What's Next

The architecture is solid. The UI guides users far more smoothly than before. The backend is designed to scale, though I still need to prove that under load.

Now it's about refinement. Making sure every optimization type works reliably. Handling the edge cases where AI and infrastructure don't play nicely together.

This is the unglamorous part of building with AI. Less about the amazing things it can do, more about making those things work consistently for real users.

Following our progress? We post regular updates about the real challenges of building AI-powered infrastructure tools.